Before you buy a gaming monitor, it is worth weighing G-Sync and FreeSync against each other. Both technologies improve display performance by matching the monitor’s refresh rate to the frame rate of the graphics processor.

Each option has its own advantages and disadvantages. FreeSync displays can suffer from artifacts such as ghosting, whereas G-Sync costs more but generally avoids this issue.

FreeSync Vs G-Sync: How Do I Know Which One Is Best?

FreeSync and G-Sync are two of the most notable display standards to emerge as graphics hardware has advanced.

V-Sync was one of the earliest techniques for dealing with the mismatch between the frame rate of the graphics processor and the refresh rate of the display. It was developed to prevent tearing in video games.

Let’s talk quickly about V-Sync before we get into a more in-depth explanation of the technologies that are behind G-Sync and FreeSync.

What Is V-Sync?

V-Sync, short for “vertical synchronization,” is a display technology whose primary purpose is to prevent screen tearing. Tearing occurs when the monitor’s refresh rate cannot keep up with the frames being sent by the graphics processor, so that parts of two different frames appear on screen at the same time.

How V-Sync Works

This is particularly useful in games. A typical PC game runs at around 60 frames per second (FPS), but many high-end titles run at 120 FPS or more, which calls for a monitor with a refresh rate of 120Hz to 165Hz. If such a game runs on a monitor with a refresh rate below 120Hz, the graphics processor produces frames faster than the display can show them, and tearing results. V-Sync addresses this by placing a hard cap on the frame rate so that it never exceeds the monitor’s refresh rate.

V-Sync works well with older displays, but it often prevents modern graphics processors from running at their full potential. A gaming monitor, for example, will generally have a refresh rate of at least 100Hz. If the graphics card outputs at a lower rate (for example, 60Hz), V-Sync keeps it from operating at peak performance.
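
To make the frame cap concrete, here is a minimal Python sketch of how classic double-buffered V-Sync limits the displayed frame rate. The function name and the simplified model (each frame is held for a whole number of refresh intervals) are assumptions for illustration, not a description of any particular driver.

```python
import math


def vsync_frame_rate(gpu_fps: float, refresh_hz: float) -> float:
    """Displayed frame rate under a simplified double-buffered V-Sync model."""
    if gpu_fps <= 0 or refresh_hz <= 0:
        raise ValueError("rates must be positive")
    # A finished frame waits for the next refresh, so every frame
    # occupies a whole number of refresh intervals (at least one).
    intervals_per_frame = max(1, math.ceil(refresh_hz / gpu_fps))
    return refresh_hz / intervals_per_frame


print(vsync_frame_rate(200, 60))  # GPU faster than the display: capped at 60.0
print(vsync_frame_rate(50, 60))   # GPU slightly too slow: falls to 30.0
```

In this model a graphics card that could render 200 FPS is held to 60 FPS on a 60Hz monitor, and one that manages only 50 FPS drops all the way to 30 FPS, which is the kind of lost performance that adaptive sync technologies aim to avoid.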

FreeSync Vs G-Sync – Detailed Analysis

A variety of new technologies have emerged since V-Sync was developed. Technologies such as G-Sync and FreeSync improve display performance and, in their newer tiers, picture elements such as color and brightness.

With that in mind, here is a more in-depth look at the FreeSync and G-Sync standards so that you can choose the monitor best suited to your needs.

What Is G-Sync?

NVIDIA developed G-Sync and released it to the public in 2013. It synchronizes the user’s display with the output of the graphics processor, which leads to smoother performance overall, especially in games.

The G-Sync Mechanism

G-Sync ensures that when the GPU’s frame rate falls out of sync with the monitor’s refresh rate, the monitor adjusts its refresh rate to match.

For example, if the graphics card is producing 50 frames per second (FPS), the display’s refresh rate changes to 50Hz. If the frame rate falls to 40 FPS, the display drops to 40Hz. G-Sync’s effective range typically starts at 30Hz and extends up to the display’s maximum refresh rate.
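
The behavior described above can be sketched in a few lines of Python. This is only an illustration: the function name is hypothetical, the 144Hz maximum is an assumed example panel, and clamping at the bottom of the range is a simplification of what real hardware does there.

```python
def adaptive_refresh_hz(gpu_fps: float, min_hz: float = 30.0, max_hz: float = 144.0) -> float:
    """Refresh rate a variable-refresh display would run at for a given frame rate.

    Inside the supported range the display simply follows the GPU.
    Outside it, this sketch clamps to the nearest limit (a simplification).
    """
    return min(max(gpu_fps, min_hz), max_hz)


print(adaptive_refresh_hz(50))   # 50 FPS -> 50 (display follows the GPU)
print(adaptive_refresh_hz(40))   # 40 FPS -> 40
print(adaptive_refresh_hz(200))  # above the range -> 144.0
```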

The primary advantage of G-Sync is that it eliminates screen tearing as well as other common display problems associated with V-Sync.

What is G-Sync Ultimate?

To keep pace with these developments, NVIDIA has released an improved version of G-Sync called G-Sync Ultimate. The primary characteristics that set it apart from other G-Sync hardware are:

- a built-in R3 module,

- support for high dynamic range (HDR), and

- the ability to display 4K images at 144Hz.

Although it delivers exceptional performance across the board, G-Sync’s price tag is its most significant disadvantage. To make full use of native G-Sync, users need to invest in both a G-Sync-ready monitor and a G-Sync-ready graphics card. This requirement substantially limits the range of G-Sync products to choose from.

What Is FreeSync?

AMD introduced the FreeSync standard in 2015 as another adaptive synchronization option for LCD displays. It is intended to reduce the tearing and stuttering that occur when a monitor is out of step with the frame rate of the content.

The Mechanism Behind FreeSync

FreeSync relies on the Adaptive-Sync standard built into the DisplayPort 1.2a specification, so any monitor equipped with that input can be compatible. This also means that FreeSync cannot be used over legacy connectors such as VGA or DVI.

Drawbacks of FreeSync

While FreeSync is unquestionably an improvement over the older V-Sync standard, it is not without flaws. Ghosting is a major drawback: a moving object leaves a faint trail of its previous position behind it, creating a shadowy afterimage.

A major cause of ghosting on FreeSync hardware is imprecise power management. If too little power is applied to the pixels, they change too slowly and moving images show gaps. If too much power is applied, ghosting occurs.

What is FreeSync 2?

In 2017, AMD introduced an improved version of FreeSync known as FreeSync 2 HDR to overcome some of the original standard’s limitations. Displays that meet this standard are required to have:

- high dynamic range (HDR) support,

- low framerate compensation (LFC), and

- the ability to switch between standard dynamic range (SDR) and high dynamic range (HDR) support.

FreeSync Vs FreeSync 2

The most significant difference between FreeSync and FreeSync 2 devices is that the latter automatically enables low framerate compensation (LFC) to prevent stuttering and tearing when the frame rate drops below the range the monitor supports.
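
As a rough illustration of the idea behind low framerate compensation, the Python sketch below repeats each frame enough times to bring the refresh rate back inside the monitor’s supported range. The function name and the 48Hz to 144Hz range are assumptions chosen for the example, and the sketch assumes the panel’s maximum refresh rate is at least twice its minimum. It is not AMD’s actual algorithm.

```python
import math
from typing import Tuple


def lfc_refresh_hz(gpu_fps: float, min_hz: float = 48.0, max_hz: float = 144.0) -> Tuple[int, float]:
    """Return (times each frame is shown, resulting refresh rate in Hz)."""
    if gpu_fps <= 0:
        raise ValueError("frame rate must be positive")
    if gpu_fps >= min_hz:
        # Already inside (or above) the range: no frame repetition needed.
        return 1, min(gpu_fps, max_hz)
    # Below the range: show each frame several times so the panel
    # still refreshes at a rate it supports.
    multiplier = math.ceil(min_hz / gpu_fps)
    return multiplier, gpu_fps * multiplier


print(lfc_refresh_hz(30))   # (2, 60): each frame shown twice at 60Hz
print(lfc_refresh_hz(20))   # (3, 60): each frame shown three times
print(lfc_refresh_hz(100))  # (1, 100): inside the range, nothing to do
```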

FreeSync Vs G-Sync – Which Is Better?

If overall performance and picture quality are your top priorities when choosing a monitor, both G-Sync and FreeSync hardware come in a wide variety of configurations to suit almost any need.

Tearing

The main difference between the two standards is the amount of input lag or tearing each one allows.

Latency

FreeSync is a good fit if you want the lowest possible input latency and don’t mind some tearing in your games.

Motion

G-Sync-equipped displays are the better choice if you prioritize smooth, tear-free motion and are willing to accept some input latency in exchange.

Industry Standard

For most consumers and professionals, both G-Sync and FreeSync deliver picture quality well above that of a standard display.

Cost

If money is no object and you need top-of-the-line graphics support, G-Sync is the best choice.

Do G-Sync And FreeSync Work With HDMI?

Ever since high refresh rate gaming took off, players have reported issues such as screen tearing and increased input latency. These problems are especially noticeable in first-person shooters.

To address them, NVIDIA and AMD developed G-SYNC and FreeSync respectively. This raises the question of whether either technology can be used over HDMI.

FreeSync and G-SYNC both work over HDMI, but they differ in several ways. AMD FreeSync is compatible with a much wider range of HDMI versions (1.2 or later).

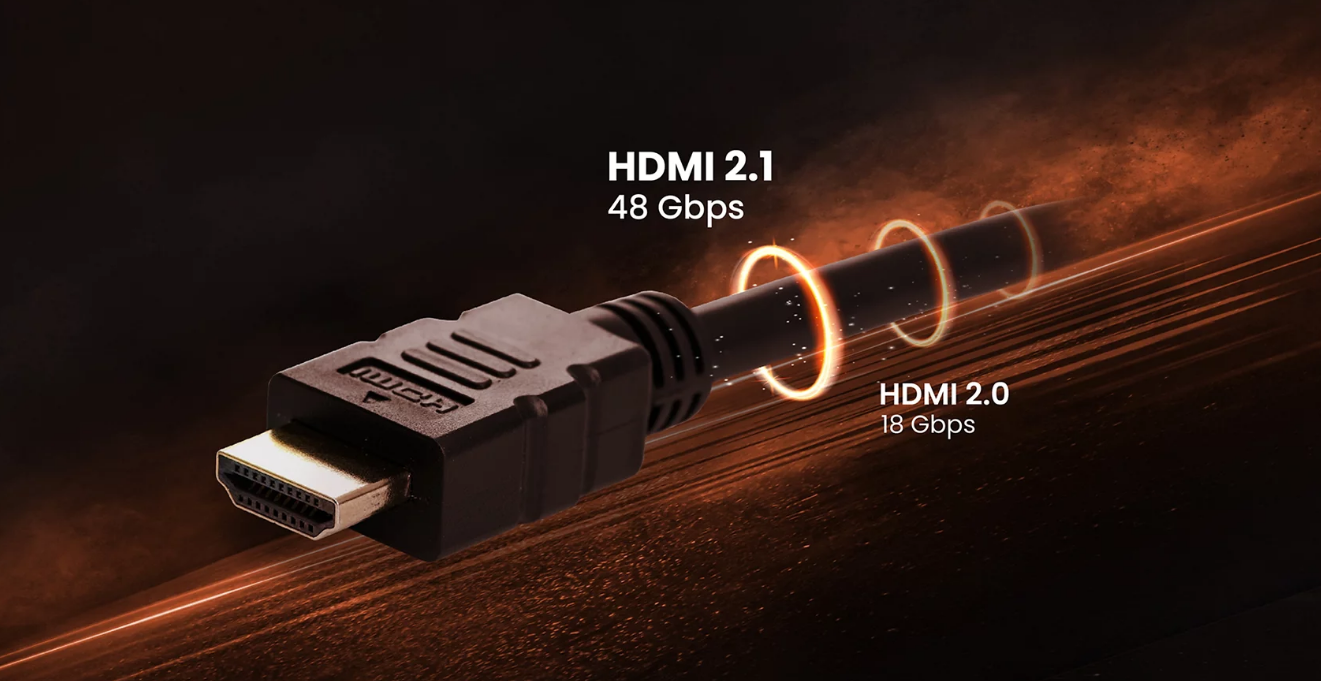

NVIDIA G-SYNC over HDMI, on the other hand, requires a GeForce RTX 30 Series or newer graphics card as well as a display that supports HDMI 2.1.

Does G-Sync Work With HDMI?

A common question is whether G-SYNC can be used over HDMI. It is important to know that not every HDMI version works with G-SYNC. If your PC doesn’t have a GeForce RTX 30 Series graphics card, or if your display doesn’t support HDMI 2.1, G-SYNC won’t work over HDMI.

If a television is going to be your main gaming display, you’ll want G-SYNC support over HDMI from the start. The reason is that DisplayPort, the de facto standard for high frame rate gaming and adaptive sync, is not typically included on TVs.

The simple reason older HDMI versions do not support G-SYNC is that they lack the bandwidth for it. Standardized variable refresh rate (VRR), which G-SYNC relies on over an HDMI link, did not arrive until HDMI 2.1 was released. HDMI 2.1 can carry 4K video at 120 frames per second and 8K video at 60 frames per second.
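
A quick back-of-the-envelope calculation shows why bandwidth matters here. The Python sketch below estimates the raw pixel data rate for the two video modes mentioned above and compares it with the maximum link rates of HDMI 2.0 (18 Gbps) and HDMI 2.1 (48 Gbps). It assumes 24 bits per pixel and ignores blanking intervals and encoding overhead, so real requirements are higher than these figures. The function name is illustrative.

```python
HDMI_2_0_GBPS = 18.0  # maximum link rate of HDMI 2.0
HDMI_2_1_GBPS = 48.0  # maximum link rate of HDMI 2.1


def raw_video_gbps(width: int, height: int, fps: int, bits_per_pixel: int = 24) -> float:
    """Uncompressed pixel data rate in Gbps (active pixels only)."""
    return width * height * fps * bits_per_pixel / 1e9


for name, width, height, fps in [("4K at 120 FPS", 3840, 2160, 120),
                                 ("8K at 60 FPS", 7680, 4320, 60)]:
    rate = raw_video_gbps(width, height, fps)
    print(f"{name}: {rate:.1f} Gbps raw, "
          f"fits HDMI 2.0: {rate <= HDMI_2_0_GBPS}, "
          f"fits HDMI 2.1: {rate <= HDMI_2_1_GBPS}")
```

Even by this optimistic estimate, 4K at 120 FPS needs roughly 24 Gbps, well beyond what HDMI 2.0 can carry, while 8K at 60 FPS comes close to the HDMI 2.1 ceiling on raw pixel data alone.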

Before HDMI 2.1, HDMI could not match DisplayPort’s data transmission capacity. This is why NVIDIA originally built G-SYNC around the DisplayPort connector.

How To Enable G-SYNC On HDMI Port

To enable G-SYNC on an HDMI port, you’ll need the following:

- A GeForce RTX 30 Series graphics card with the latest drivers installed.

- An HDMI 2.1 cable certified for 48 Gbps of bandwidth.

- A display that supports HDMI 2.1.
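
The checklist above can be expressed as a small Python sketch. Everything here is hypothetical and for illustration only: the `Setup` class and `missing_requirements` function are not part of any NVIDIA tool, and the cable figure is taken to mean 48 Gbps, the HDMI 2.1 maximum.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Setup:
    """Hypothetical description of a PC and display (not an NVIDIA API)."""
    rtx_series: int           # 30 for a GeForce RTX 30 Series card
    drivers_up_to_date: bool
    cable_gbps: float         # rated cable bandwidth in Gbps
    display_hdmi_2_1: bool


def missing_requirements(setup: Setup) -> List[str]:
    """List which of the article's G-SYNC over HDMI requirements are unmet."""
    problems = []
    if setup.rtx_series < 30:
        problems.append("GeForce RTX 30 Series graphics card")
    if not setup.drivers_up_to_date:
        problems.append("latest graphics drivers")
    if setup.cable_gbps < 48:
        problems.append("HDMI 2.1 cable rated for 48 Gbps")
    if not setup.display_hdmi_2_1:
        problems.append("display with HDMI 2.1 support")
    return problems


print(missing_requirements(Setup(30, True, 48, True)))   # nothing missing
print(missing_requirements(Setup(20, True, 18, False)))  # three items missing
```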

Steps to Enable G-SYNC on an HDMI Port

- Install a GeForce RTX 30 Series graphics card.

- Use an HDMI 2.1 cable to connect the graphics card to your display.

- Boot up your computer and check for available driver updates.

- In the display’s own settings menu, turn on VRR and HDMI 2.1 support, along with G-SYNC if the display lists it.

- Open the NVIDIA Control Panel by right-clicking the desktop.

- Go to Display > Set up G-SYNC.

- Select the option to enable G-SYNC, G-SYNC Compatible, then set the refresh rate and resolution to your preference.

- Click Apply to save the changes.

- Once these changes have been made, G-SYNC over HDMI should run without tearing or stuttering.

Does FreeSync Work With HDMI?

FreeSync works with earlier HDMI versions as well as HDMI 2.1. If you game on a PC with an AMD graphics card or on an Xbox, you don’t need to give much thought to whether you use a DisplayPort or an HDMI connection, because FreeSync works over both.

Unlike NVIDIA’s, AMD’s variable refresh rate technology worked over HDMI long before HDMI 2.1 existed. If you have a FreeSync-capable graphics card, the HDMI connection you already use is likely to be sufficient.

Keep in mind that FreeSync, like G-SYNC, requires a display that has been tested and found compatible with the standard. You can’t plug just any monitor into an HDMI port and expect FreeSync to start working. To use FreeSync reliably, the monitor must be certified by AMD.

(AMD maintains a list showing which monitors and devices are compatible with FreeSync.)

A monitor connected over HDMI can support standard FreeSync, FreeSync Premium, or FreeSync Premium Pro. The most significant difference between the three tiers is that the higher tiers offer better HDR support and less tearing and flicker.

At present, only a handful of displays support FreeSync Premium Pro over HDMI. Over DisplayPort, by contrast, the standard is supported on a much broader selection of monitors.

This is because HDMI places a practical cap on the amount of data that can be transmitted over the connection at any one time.

How To Enable FreeSync Over HDMI?

The following conditions must be met before FreeSync can be enabled over HDMI:

- Connect an HDMI cable (version 1.2 or later).

- Use a monitor that supports FreeSync.

- Use an AMD GPU that supports FreeSync.

The following is a rundown of the steps required to enable FreeSync over HDMI.

Steps to Enable FreeSync Over HDMI

- Right-click the desktop and open AMD Radeon Settings.

- Click the Display tab.

- Displays that support AMD FreeSync are indicated in the display list. Select the AMD FreeSync option and make sure it is turned on.

- Once these changes have been made, close the settings window.

Conclusion

With HDMI 2.1, NVIDIA has at last brought G-SYNC to HDMI, a move that was a long time coming. Even so, AMD retains a clear advantage in frame synchronization over HDMI, because FreeSync works across a range of HDMI versions.

Despite their differences, both standards do an excellent job of eliminating screen tearing and reducing input latency. With either one enabled, there is a clear, measurable improvement in both gameplay and general desktop use.
